An Open Letter to Google: Please Enhance Transparency in Image Search Results

As a leading GenAI startup specializing in AI-powered creative tools for content creators, we feel compelled to address an important issue affecting the Internet: the need for clear identification of AI-generated content in search results.

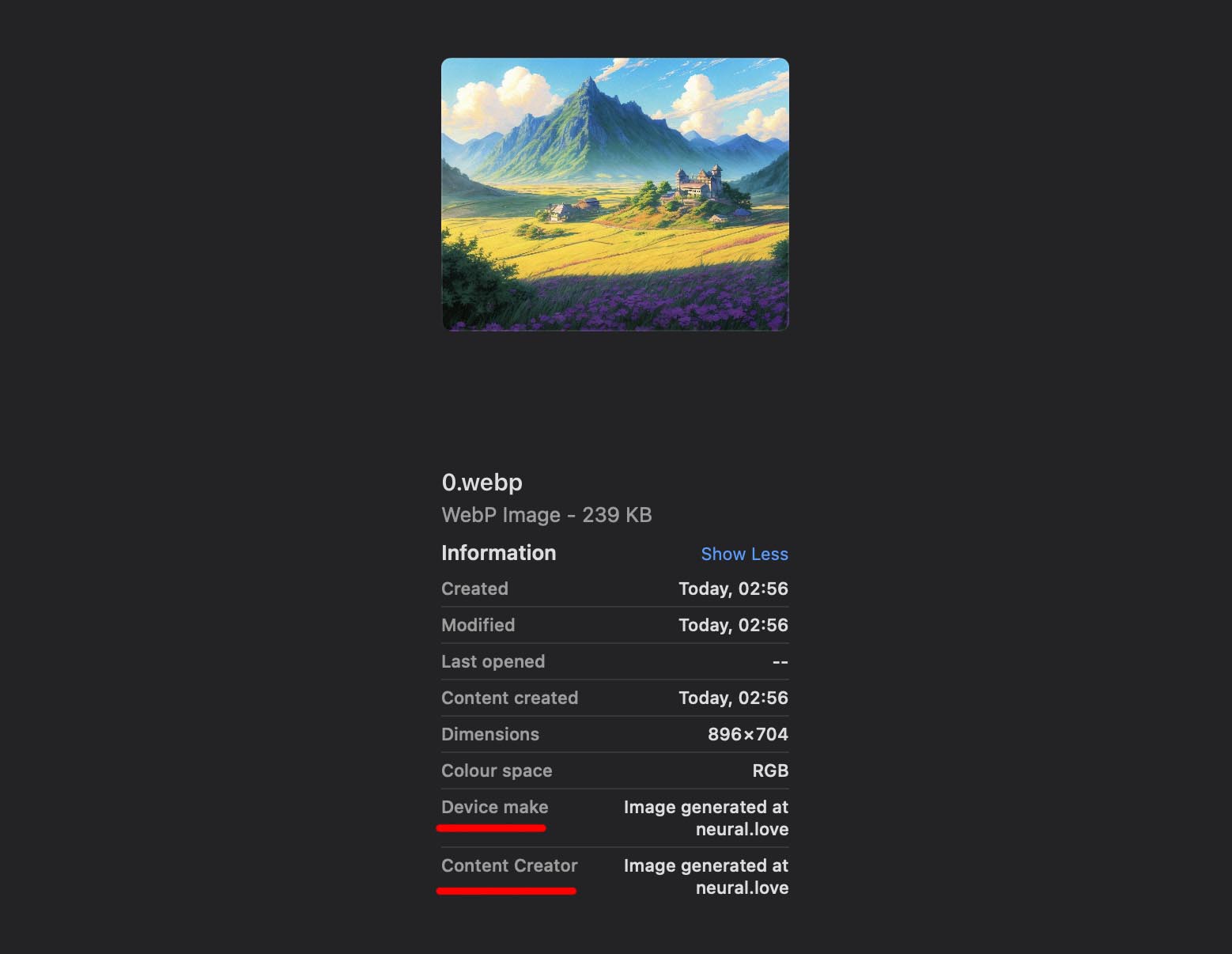

At neural.love, GenAI transparency is at the core of our ethos. We’ve implemented several measures to ensure our users and the public can easily identify AI-generated images:

- Embedded EXIF data indicating neural.love as the source (EXIF data refers to metadata embedded within image files that can include information like the source and creation details)

- Visible watermarks on all generated images

- Clear disclaimers about potential AI inaccuracies

- Prominent headers identifying AI-generated content, for example:

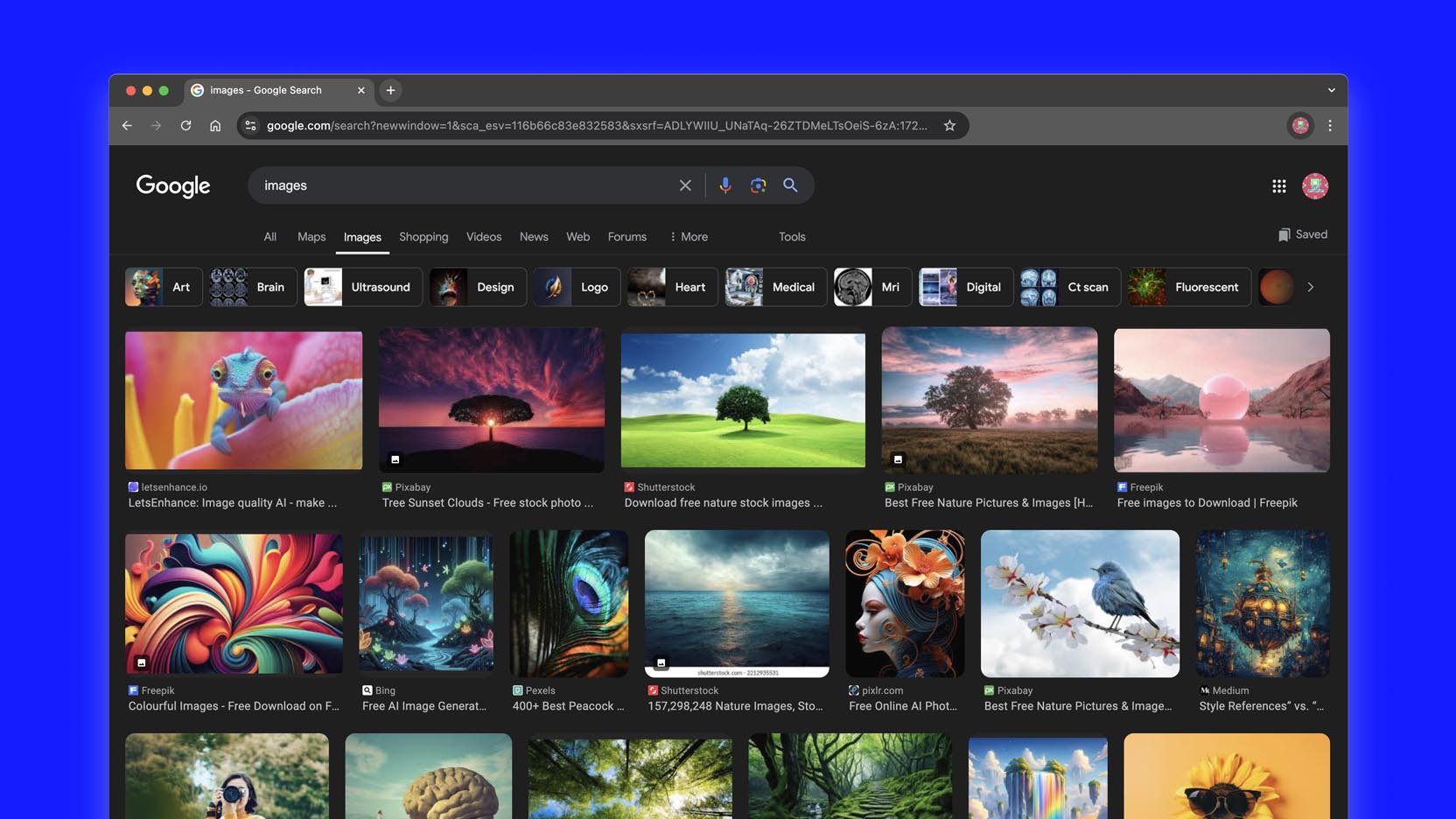

The Challenge Google Images Users Face

As advocates for responsible AI integration, we are deeply concerned about the current state of image search results and its impact on internet users. Despite efforts by companies like ours to clearly label AI-generated content, search engines, including Google Images, do not consistently differentiate between AI-generated and human-created images. This lack of distinction presents several challenges for users:

- Misinformation Risk: Users may unknowingly encounter and share AI-generated images as if they were authentic photographs, potentially spreading misinformation.

- Educational Confusion: Students and researchers relying on image search for factual information may be misled by realistic AI-generated content.

- Creative Attribution Issues: Artists and photographers may find their work mixed with AI-generated images, complicating attribution and recognition.

- Erosion of Trust: As users discover unlabeled AI content in search results, they may come to distrust image search tools in general.

A Case in Point

A recent example shows AI-generated “baby peacocks” appearing alongside real photographs in image search results. Without proper labeling, users can easily mistake these AI creations for authentic images and come away with misconceptions about the animals’ appearance and biology.

Our concern stems from a commitment to Internet transparency and user empowerment: Internet users deserve to make informed decisions about the content they consume and share. By addressing this challenge, we can collectively contribute to a more trustworthy and transparent online environment.

Our Proposition

As stakeholders in the AI creative industry, we propose a collaborative solution:

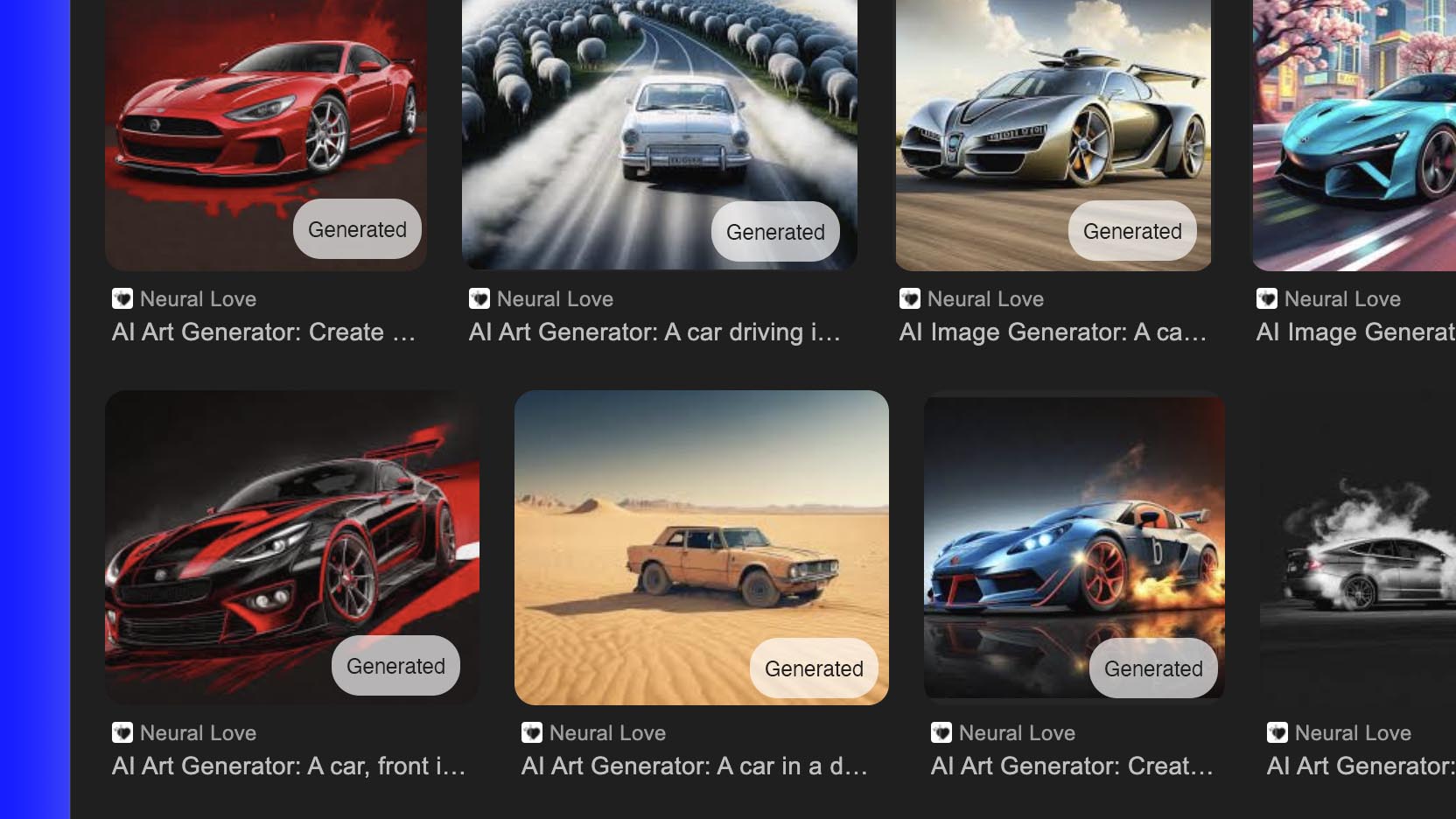

- AI Content Labeling: Implement clear labels for AI-generated images in search results. Instead of waiting for platforms to adopt the C2PA (Coalition for Content Provenance and Authenticity) standard, proactively read the EXIF data, image titles, and page descriptions on Google’s side. If any of them clearly states that the content is AI-generated, label the image accordingly.

Quick-and-ugly UI concept:

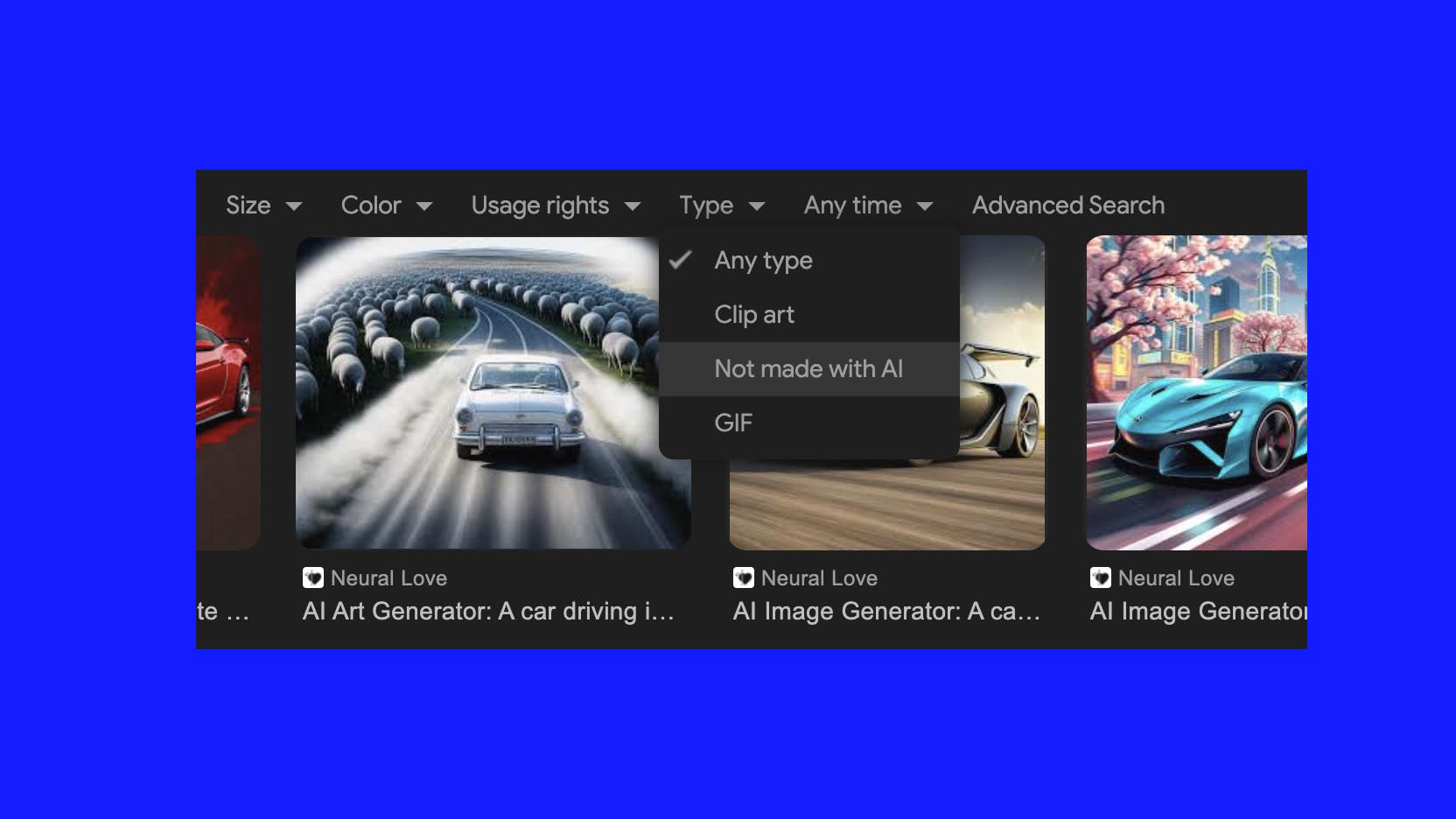
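The labeling rule above amounts to a simple check over signals that pages and image files already expose. Below is a minimal Python sketch, assuming the EXIF fields, image title, and page description have already been extracted as plain strings. The disclosure phrases, the `KNOWN_GENERATORS` list, the choice of EXIF tags (`Software`, `ImageDescription`, and `Artist` are standard EXIF tag names, but which ones a given tool fills in is an assumption), and all function names are hypothetical illustrations, not a description of how Google’s systems work.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical phrases treated as a clear statement that an image is AI-generated.
AI_DISCLOSURE_PHRASES = ("ai-generated", "ai generated", "generated by ai", "created with ai")

# Hypothetical list of generator names to look for in metadata.
KNOWN_GENERATORS = ("neural.love",)

# Standard EXIF tag names a generator might fill in (assumption: each tool
# decides which tags, if any, it actually writes).
EXIF_TAGS_TO_CHECK = ("Software", "ImageDescription", "Artist")


@dataclass
class ImageResult:
    url: str
    exif: Dict[str, str] = field(default_factory=dict)  # tag name -> value, already extracted
    title: str = ""
    page_description: str = ""


def states_ai_generated(text: str) -> bool:
    """True if the text contains one of the hypothetical disclosure phrases."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in AI_DISCLOSURE_PHRASES)


def names_known_generator(text: str) -> bool:
    """True if the text mentions a generator from the hypothetical list."""
    lowered = text.lower()
    return any(name in lowered for name in KNOWN_GENERATORS)


def detect_ai_disclosure(result: ImageResult) -> Optional[str]:
    """Return where AI generation is disclosed ("exif:<tag>", "title",
    or "page_description"), or None if nothing clearly says so."""
    for tag in EXIF_TAGS_TO_CHECK:
        value = result.exif.get(tag, "")
        if states_ai_generated(value) or names_known_generator(value):
            return f"exif:{tag}"
    if states_ai_generated(result.title):
        return "title"
    if states_ai_generated(result.page_description):
        return "page_description"
    return None


def label_for(result: ImageResult) -> str:
    """Label text to show next to a result; empty when there is no clear disclosure."""
    return "AI-generated" if detect_ai_disclosure(result) is not None else ""
```

For example, a result titled “Baby peacock (AI-generated)” would be labeled via its title, while one whose EXIF `Software` field names neural.love would be labeled via its metadata. Plain keyword matching is deliberately naive: a caption such as “not AI-generated” would be a false positive, so a production version would need stricter rules, or structured provenance data such as C2PA manifests once platforms adopt them.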

- GenAI Filtering Options: Give users the ability to filter out AI-generated content or to view only AI-generated content. Since not all internet users appreciate generative AI, this feature would allow for a more personalized and trustworthy search experience.

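Whatever the final UI looks like, the filtering logic behind it can stay simple once results carry a label. Below is a minimal Python sketch, assuming each result already has a boolean `ai_generated` flag produced by a labeling step like the one proposed above; the `AIFilter` modes, the `SearchResult` type, and the `apply_ai_filter` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class AIFilter(Enum):
    """Hypothetical three-state search setting."""
    SHOW_ALL = "all"        # default: no filtering
    EXCLUDE_AI = "exclude"  # hide results labeled as AI-generated
    ONLY_AI = "only"        # show only results labeled as AI-generated


@dataclass
class SearchResult:
    url: str
    ai_generated: bool  # assumed to come from an upstream labeling step


def apply_ai_filter(results: List[SearchResult], mode: AIFilter) -> List[SearchResult]:
    """Return the results visible under the chosen mode, preserving ranking order."""
    if mode is AIFilter.EXCLUDE_AI:
        return [r for r in results if not r.ai_generated]
    if mode is AIFilter.ONLY_AI:
        return [r for r in results if r.ai_generated]
    return list(results)
```

Note the asymmetry: `EXCLUDE_AI` can only hide images that are labeled, so unlabeled AI content would still get through. This is why the filter depends on the labeling proposal, and on creators disclosing AI generation in the first place.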

The Path Forward

We believe that, by working together, AI startups and tech giants like Google can create a more transparent and trustworthy digital environment. This approach would benefit users and also support the responsible development and integration of AI technologies in creative fields.

Given its size, Google may never notice this letter. Nevertheless, we invite Google and other search engines to engage in a dialogue about implementing these suggestions, and we are ready to help with any technical adjustments needed on our side.

Let’s collaborate to ensure that the incredible potential of AI in creative fields is realized responsibly and transparently for every type of user, whether they love GenAI or hate it.

Denis Shiryaev, neural.love CEO